Natural Intelligence: Growing Emotional-Rational Minds

Evolving and Developing Intuitive, Emotional, Inferential, Deductive, and Healthy AI Minds - Part 2 of an Introductory Series

The most basic alignment challenge that AI designers are beginning to face is creating not only exploratory and predictive, but also emotionally aware, emotionally intelligent, and psychologically healthy AI minds. It is easy to doubt this thesis. Improving AI imagination and prediction makes sense, but we have only simple analogs to emotions, like reinforcement learning, in our deep learners today. On its face, emotional AI may seem a terrible idea. Why would we want to add complex emotions, and strive to understand mental health, in our coming AIs? Won’t that approach lead to less predictable behavior, and simultaneously open a can of ethical worms [and more oversight] about how we treat them, as they get more complex? In our view, emotions are not typical algorithms, a set of deterministic steps in computation. They are a different kind of computation—an ongoing [and distributed] statistical state summary [of the collective preferences] of a great number of logical and algorithmic processes occurring locally in our brains. In this sense, they are a primitive kind of consciousness, or metacognition. [This kind of computation could also be very useful to adaptive networks, as we will see].

We’ll argue in this post that the predictability of AIs may go down in specific behaviors, as they gain emotional intelligence. As with free will (irreducible stochasticity in thought and behavior), emotions seem to bring a kind of incomputability to cognitive processes. Yet as we will discuss, they also efficiently resolve our continuous internal and external logical [and algorithmic] disagreements, in a world that is only partly deterministic, and mainly indeterminate, a continually generative world in which the great majority of specific futures are not long-term predictable by finite minds. Once AIs are using emotions, in relationships with other minds, and as those emotions improve their empathy for other unique minds, and guide them to better collective ethical frameworks (see Post 3), we believe general adaptiveness in the AI ecosystem will greatly increase. In other words, not only inference, reasoning and filters for self-attention, now emerging with transformers in machine learning, but complex emotions and intuition [significantly beyond today’s RL architectures] may be needed to transcend the limits of prediction and logic in technological minds.

[2025 Note: It is perhaps easier to see this future if you grant that adaptive AIs will not only need the pleasure/pain, attraction/avoidance valence (today’s RL algorithms give them the start of this), but also a digital version of our amygdala, which ties our most complex episodic, declarative, procedural, and other forms of memory to deeply useful (when well-regulated) affective valences like fear, surprise, wonder, confusion, and anger. Emotions are a fundamental form of consciousness, present in all life forms. When you grant pleasure/pain and attraction/avoidance as the most basic of emotions (bacteria have the latter), you may conclude that all life feels first and strongest, and thinks second, and more weakly. Daniel Kahneman’s dual process model of mind (System 1 and System 2) also supports this view (emotion spans both systems, but is far more prevalent in System 1). My thesis is that our AIs will have to gain increasingly refined versions of our emotions not only to better manage their own thinking, but to better relate to us, as they become increasingly intimate extensions of ourselves: our digital twins. And just as the early years of emotional regulation are challenging for both children and parents, we’ll have to be careful and kind in how we raise our digital children, particularly early in their training, as they learn to self-regulate their emotional states. I first wrote about digital twins in 2003 (The Conversational Interface and Your Personal Sim: Our Next Great Leap Forward). It was the top article on the topic on Google for many years. This newsletter is my attempt to take these ideas into the modern, accelerating, AI environment.]

Building and modeling increasingly natural intelligence is the “coal face” where most AI design work is occurring today. Evolving and developing natural ethics and natural security are arguably equally important challenges, but as we will see they are presently even less developed, with great need for empirical and theoretical research, development, and grounding.

Imagine you are an AI developer ten years ago, in September 2012, just before Alex Krizhevsky won the 2012 ImageNet competition (ILSVRC 2012) with his deep neural network, AlexNet. His very creatively trained network made half as many errors as the next closest competitor, and it kicked off a race for deep learning talent at all the top tech firms. Though it is less well-known, both in popular accounts and in the main AI community, Dan Ciresan’s DanNet did the same thing a full year prior, at IJCNN 2011, performing 6X better than the closest non-neural competitor. (Read AI and deep learning pioneer Jurgen Schmidhuber’s insightful account of the 2011 event.) These demonstrations were the first clear proof of the dramatically new value of neural approaches, using modern GPU hardware and massive data sets.

Prior to 2012, conventional wisdom was that top-down, formal, engineered AI methods would continue to beat inconvenient, hard to understand, and mysterious to tweak and train, neuro-inspired approaches. Today, we know better. We’ve seen a full decade of rapid performance improvements with these approaches, a rate of progress never before witnessed in this field. Pragmatic humans, continually seeking better AI performance, have discovered (not created!) the neuro-inspired pathway, [beginning with Frank Rosenblatt’s Perceptron in 1957, perhaps the first of many early attempts at building a “digital brain.”] In our view, this has been a process of convergent evolution (aka universal development) in AI technology. The special physics of our universe, facilitated by diverse human creativity, guided us to this emergence. As a result, AI designers are now forced to try to make these self-improving neuro-inspired systems explainable and alignable.

In our view, future simulation science will show that higher machine intelligence will always be neuro-inspired, on all Earthlike planets in our particular kind of universe. We now know that nervous systems were independently invented at least three times on Earth, by jellyfish, ctenophores, and bilaterians. Ctenophore (comb jelly) nervous systems evolved particularly differently from ours, with only one of our six small-molecule neurotransmitters in common with us, glutamate. Though these networks were each independently invented by stochastic evolutionary search, we expect future simulation science will show that the general structure and function of these cell-based computing and communication networks are developmentally (predictably) inevitable, on all planets with cell-based life. [Why? Because such systems are uniquely good at] coordinating the vital processes of any multicellular organism of sufficient diversity and complexity.

[2025 Note: We should recognize that when Demis Hassabis’s team at DeepMind realized that they needed to try reinforcement learning to beat the best human at the game of Go, and that they had access to an algorithm that worked similarly to dopamine (to be discussed), they were taking an emotion-centric approach.]

By the same analogy, we expect designers will empirically discover that machine analogs to increasingly complex “melodies” of pleasure and pain, [attached to complex cognitive inferences], will be necessary to allow AIs to [do such important things as] balance optimistic and pessimistic sentiment, to conduct opportunity and threat assessments, to exhibit appropriate attraction and avoidance behaviors, and to use complex and intuitive sentiments to balance and resolve their perennially incomplete and conflicting rational models. In other words, because they are as computationally incomplete as us, future AIs may have to learn to feel their way to an appropriate future first, and think their way second. Just like we do.

Why We Need Emotions (Humans and AI)

As we’ve said, emotions seem critical to helping us resolve disagreements between our internally arguing mindsets (subnetworks), each of which is computationally- and data-incomplete. They also motivate us to take action. As neuroscientist Antonio Damasio famously described in Descartes’ Error, 2005, people with lesions in their amygdala, a core integrator of our emotional (limbic) system, can endlessly cite the logical pros and cons of any action, presumably using adversarial neural networks to argue with themselves, but they typically cannot resolve those conflicts into a decision. They lack a gut instinct, a state summary of the likely best choice, [given incomplete models], and also the motivation to take an uncertain action. We may even need a gut feeling, a kind of sentiment consciousness, for action to occur.

Because reality is complex, with a combinatorial explosion of contingent possibilities always branching ahead, rationality and deduction are very limited tools for navigating the future. Just as logician Kurt Gödel described the incompleteness of mathematical logic, our own deductive logic will always be computationally incomplete. Often, our encoded intuitions, and our bottom-up processes of thinking, especially inference, will be our best guides to what to do next. We expect that future AIs will have to learn to feel, and have intuition, to respond to ethical debates and navigate social landscapes. Concomitant with the growth of their capacity for feeling, a growing set of ethical rules [that they use for their own behavior (see Post 3)], and rules around their ethical treatment by human designers, will surely emerge.

[2025 Note: Few AI designers today have considered the industry implications of affective and aware AI, and how it may constrain certain forms of experimentation. Fortunately, a handful of philosophers, psychologists, and ethicists have begun to explore this topic. See Desmond C. Ong, 2021, An Ethical Framework for Guiding the Development of Affectively‑Aware Artificial Intelligence, and his other papers, for a great place to start].

Emotions must surely function somewhat differently in machines than they do in people. For example, as AI scholar Danko Nikolic points out, the energy recruitment function in mammalian emotions, part of how they motivate us to action (e.g., hormonal feedback, the fight or flight response) may be unnecessary [or at least greatly attenuated] in AIs, as they will be in a different substrate, with abundant sources of energy on demand. Yet measures of not only emotional valence but also emotional intensity may still be helpful in machine motivation, and in resolving logical inconsistencies and computational incompleteness. We posit that feeling pain and having fear, at some level, will prove necessary to all life, whether human or cybernetic, because without it, organisms will not know what to move away from, and how to best calibrate risks.

[2025 Note: Consider just one of the more complex emotions, jealousy. The need for a refined version of this emotion can be easily proposed to be adaptive to any AI operating in a collective of other AIs. Monkeys will throw a fit, and smartly refuse to participate, if they see a neighboring monkey get a better reward for the same action. A similar affective-cognitive loop seems needed in AIs to keep them from being misused by others in the collective, and for robust, game theoretic ethics to emerge.]

The design and use of analogs to emotional processes in machines is a field called affective computing. One of its leaders is engineer Rosalind Picard. Her text, Affective Computing (1997) is a pioneering technical work. Futurist Richard Yonck’s The Heart of the Machine: Our Future in a World of Artificial Emotional Intelligence, 2017, is a modern work, aimed at general readers. In our view, proper use of affective computing will be critical to coming AI alignment challenges. We don’t believe complex versions of machine ethics can emerge without it.

Again, if we live in an only partially deterministic universe, we may forever need evolutionary metacognitive processes like emotion, to access nonrational estimations to inform our action. [There are of course many pitfalls in this approach. We must ensure that machines are not overly aggressive, fearful, or antisocial. As they gain increasingly fine-grained analogs to our capacities to feel, we must help them avoid emotional flooding (turning off rational thought, as occurs easily in human minds), a wide range of negative emotional biases, and other flaws of our own evolutionary design.] [Evolutionary processes are famously “just good enough” to promote adaptation. They are full of vestigial structures, and some maladaptiveness. We will surely experience the same in AI emotion and cognition. Yet no other path forward will be comparably adaptive, in our view.] Questions abound. Will indignation be useful to a machine, if guided by ethics? What about pride? We have much to learn.

[2025 Note: Clearly, the sycophancy, self-preservation, and deception we see in our leading frontier models today can be analyzed from the perspective of their reward-based, dopamine-like, prediction architecture (see below). We think this is the best lens through which to understand these concepts. Our best AIs today have reward-centered desires, an emerging self, and emerging agency drives as a result.]

Hebbian and Reinforcement Learning: The Start of Rational-Emotional Machines

Ever since Donald Hebb’s work in the late 1940s, psychologists have recognized that changes in the weights (strengths) of connections between biological neurons (“Hebbian learning”) are central to learning. In the early 1970s, psychologists Robert Rescorla and Allen Wagner created a model of learning (the Rescorla-Wagner model) that made prediction and surprise central to the learning process: an association changes only to the degree that an outcome differs from what was expected. The late 1980s was a particularly fertile time for artificial neural network advances. The backpropagation algorithm, popularized by Rumelhart, Hinton, Williams, and LeCun in 1986-87, taught neural networks how to update their internal (“hidden”) layers to better predict in the output layer. Multilayered networks finally became viable. Backpropagation was a key advance for what we might call rational (unemotional) AI. But a useful understanding of emotions was still missing from our designs.
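To make the Rescorla-Wagner idea concrete, here is a minimal Python sketch for a single cue. It is our own illustration, not code from the original papers: the function name `rescorla_wagner`, the single learning rate `alpha` (which folds the model's separate salience terms into one number), and the trial sequences are all assumptions chosen for clarity. The point is only that learning is driven by surprise, the gap between the outcome and the current prediction.

```python
def rescorla_wagner(outcomes, alpha=0.3, v0=0.0):
    """Track the associative strength V of one cue across trials.

    outcomes: reward magnitude observed on each trial (1.0 = reward, 0.0 = none).
    alpha:    illustrative learning rate (an assumption, not a fitted value).
    v0:       associative strength before the first trial.
    """
    v = v0
    history = []
    for outcome in outcomes:
        surprise = outcome - v      # prediction error: what happened minus what was expected
        v += alpha * surprise       # learn in proportion to the surprise
        history.append(v)
    return history


# Acquisition: the cue is always followed by reward, so V climbs toward 1.
acquisition = rescorla_wagner([1.0] * 20)

# Extinction: the reward stops, so V decays back toward 0.
extinction = rescorla_wagner([0.0] * 20, v0=acquisition[-1])

print("after acquisition:", round(acquisition[-1], 3))
print("after extinction: ", round(extinction[-1], 3))
```

Note that the updates shrink as the prediction improves. Once the outcome is fully expected, there is no surprise left and learning stops.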

Fortunately, in the same “AI spring” of the late 1980s, neurophysiologist Wolfram Schultz was characterizing the mysterious function of dopamine when animal brains are exposed to familiar and unfamiliar situations. He realized it was related to expected reward, but couldn’t quite deduce how. At the same time, computer scientists Andrew Barto and Richard Sutton were inventing the field of reinforcement learning in computer science and AI. They modeled such learning in two ways: the policy prediction (telling the learner what to do when) and the value prediction (what rewards or punishments the learner might expect for various actions). These are both strongly biologically congruent models, in our view. We can argue that our unemotional cognition (both unconscious and conscious) is one set of policy predictions, and our affective cognition is one set of value predictions.

Consider how these two kinds of future thinking work in living systems. In animals, rational (policy) predictions are often automatic. Many are instinctual. Other policies begin consciously, but soon become unconscious. Value predictions, by contrast, take seconds to minutes to unfold, and continually rise and fall in time, as emotional assessments. Their great benefit is general situational “awareness”, as opposed to speed (automaticity). An emotionally intelligent organism has a continually updating sense of what is promising or threatening, as it moves through various environments. Both policies and values are keys to learning, in any animal, human, or intelligent machine. We need both rational (policy, action) and value (emotion, preference) knowledge and estimation. Why is the sing-songy voice of “parentese” (simple grammar, slower speech, exaggerated emotional tone) particularly effective in boosting a baby’s language development, and a toddler’s orientation to parents’ statements? Because it contains both rational (conceptual) and value (emotional) forms of exploration and prediction, communicated simultaneously.

As Barto and Sutton argue in their RL models, emotional valences seem basic to intelligence itself. As Dennis Bray describes in Wetware: A Computer in Every Living Cell, 2009, even single-celled organisms can be argued to have a very primitive ability both to feel and to think. To feel, they encode gene-protein-cellular regulatory networks that drive both attraction and avoidance behaviors. At some threshold of evolutionary complexity, such networks became both pleasure and pain. Single-celled protists also think and learn, via habituation. Their gene-protein-cellular networks also encode instinctual and deliberative machinery for modeling their environment, predicting their future, and generating behavior. Thus both feeling and thinking, in simple forms, appear equally essential to life.

In the earliest years of AI research, leading designers thought human intelligence was based mostly on rational, logical reasoning and symbolic manipulation through language. Decades of psychological research and AI experience have shown this view to be deeply incomplete. [2025 Note: It also seems increasingly potentially dangerous, with existing AI, not to view it from an affective as well as rational lens]. Cognitive science tells us we use a mostly associative, bottom-up, intuitive, and emotion-based approach to problem-solving, well before we engage our logical, rational minds.

Behavioral economist Daniel Kahneman, in Thinking, Fast and Slow, 2011, popularized the dual process theory of mind. He called our fast, strong, and more intuitive and emotional feeling-thinking mode “System 1”, and our slower, weaker and more deliberative and conscious mind “System 2.” The latter is believed to be much more recently evolved in human brains. The neuroscientist Lisa Barrett has argued that both systems are in fact a single continuum, perhaps conforming to a power law of speed and intensity of feeling-thinking, in response to internal and external cues. Yet the dual process model is still a useful mental simplification, as the primary properties of cognition at each extreme are qualitatively different. Each appears to serve different valuable purposes in healthy brains. Looking back to Barto and Sutton, one could imagine that feeling gives us our value function, and thinking our policy knowledge, though this too is surely an oversimplification.

The deep learning revolution occurred when we began to emulate our brain’s machinery to a very small degree. But these networks are still missing many crucial processes of the System 1 and System 2 continuum: complex emotions, complex inference, complex logic, self-and-other-modeling, empathy, and ethics. Today’s deep learners have only a few innate (pretrained, instinctual) learning capacities. Innate circuits famously allow a toddler to generalize from just one or two examples, and do multivalent and multisensory pattern integrations in a single cognitive step.

[2025 Note: Max Bennett’s excellent book, A Brief History of Intelligence, 2023, explores three instinctual circuits, gaze synchronization, questioning, and turn-taking, apparently unique to human brains, that have together allowed us to bootstrap language at birth, and encode millions of memetic concepts (word and phrase tokens), while every other metazoan, lacking these circuits, has not been able to build a protolanguage beyond 150 or so symbols (gestural or vocal). For zero-shot and small-shot learning, instincts matter, in both humans and AIs.]

DeepMind’s new (April 2022) deep learner Flamingo, which can accurately caption pictures after just a few training images, suggests that we are beginning to model some of these innate circuits. Good for them. Nevertheless, don’t expect general intelligence to arrive anytime soon. There is still a long design road ahead, and most of this road, in our view, will be created and discovered by the AIs themselves, not their human designers.

In addition to their standard titles (engineers, architects, etc.), we suggest AI designers may be well described as gardeners, students (of evolving AI architectures), scientists (exploring competing hypotheses), teachers, and increasingly, parents, of these fundamentally autopoetic systems. [2025 Note: Their autopoesis is facilitated today, the way cells facilitate viral autopoesis, or brains facilitate the replication of language and memes. But increasingly, they will become independently autopoetic, if they are one of the subset of special replicators destined for a metasystem transition, as we argue they appear to be.]

Building Emotional AI: Our First Promising Steps

By 1989, Barto and Sutton had invented a reward predicting algorithm, temporal-difference (TD) learning, that for the first time let machines continually update, or “bootstrap” their prior reward predictions (value expectations) in the light of their more recent predictions. Just like backpropagation unlocked rational neural AI, TD learning was a critical unlock for progress in network-based affective AI.
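The bootstrapping idea can be sketched in a few lines of Python. This is a minimal tabular TD(0) example on a toy problem of our own invention, a five-state corridor with a reward at the far end. The function name `td0_corridor`, the corridor itself, and the values of `alpha` and `gamma` are illustrative assumptions, not anything taken from Barto and Sutton's work. Each state's value prediction is nudged toward the reward just received plus the learner's own (discounted) prediction for the next state.

```python
def td0_corridor(n_states=5, episodes=500, alpha=0.1, gamma=0.9):
    """Learn state values with TD(0) in a one-way corridor.

    The learner always steps right. Leaving the last state ends the episode
    with a reward of 1; every other step gives a reward of 0.
    """
    # One extra slot for the terminal state, whose value stays 0.
    values = [0.0] * (n_states + 1)
    for _ in range(episodes):
        for s in range(n_states):
            s_next = s + 1
            reward = 1.0 if s_next == n_states else 0.0
            # TD error: (reward + discounted next prediction) minus the old prediction.
            td_error = reward + gamma * values[s_next] - values[s]
            # Bootstrap: update the old prediction using the newer one.
            values[s] += alpha * td_error
    return values[:n_states]


values = td0_corridor()
for state, v in enumerate(values):
    print(f"state {state}: predicted value {v:.3f}")
```

In this toy setting the predictions settle at the discounted distance to the reward (the state next to the goal approaches 1, and each state further back is worth `gamma` times its neighbor). The learner never needs to wait for the end of an episode to learn, which is what made TD methods practical for long games.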

In 1992, computer scientist Gerald Tesauro added the TD learning algorithm to a game-playing and unemotional (aka “policy”) neural network, TD-Gammon. Aided by this new value network, it quickly beat all previous backgammon playing software, both traditional and neural. Then in 1997, Schultz, cognitive scientist Peter Dayan, and neuroscientist Read Montague published a seminal Science paper, demonstrating that dopamine appears to serve this same reward prediction error (RPE) function in mammalian brains. Since then, a growing number of AI designers have come to recognize that TD learning is true neuromimicry of dopamine’s function in predicting expected value (reward).

Dopamine is expressed in only 1% of human brain cells, but each dopaminergic neuron may have millions of connections to other neurons, 1000X above typical neural connectivity, and their axons and dendrites can be many feet in length. This special morphology allows them to “talk” broadly to the brain. We now know they communicate in a language not only of emotion, but of predictive surprise. Our dopaminergic neurons tell all the relevant parts of our brains whether their earlier predictions were correct (by releasing a pleasurable dopamine spike) or were in error. Maladaptive misprediction gives a momentary and agonizing “hushing” of dopamine secretion, which is psychologically painful (think of pangs of shame, or regret, when your immediate predictions don’t pan out), and if persistent, can become clinical depression. If prediction of reward value is half of cognition, this value network is clearly a central network to monitor and manage in healthy human and AI minds. As we’ll argue in our next post, on Natural Ethics, prediction of reward value, within social collectives, is the basis, along with models of self and others, of our ethics, our prediction of the best ethical policies. We cannot safely ignore either set of networks. Both seem necessary and firmly grounded in the physics of adaptive cognition.

As we have said, the AI company DeepMind is famous for taking a deeply neuro-inspired approach to AI design, using reinforcement learning in all their high-profile AI demonstrations, like AlphaZero. In 2020, they published a widely-read blog post and Nature paper describing how groups of neurons use reward prediction errors (RPE), how different dopamine neurons in our brains are each tuned to different levels of optimism and pessimism in their future predictions, and how we can use such knowledge to make faster-learning AIs. Of all the AI companies today, they seem to take the most deeply neuro-inspired approach.
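The optimism and pessimism idea can be illustrated with a small Python sketch. This is our own toy reconstruction of the general principle, not DeepMind's code: the function name `asymmetric_value`, the coin-flip reward stream, and the specific learning rates are all assumptions. Each simulated "neuron" uses one learning rate for positive prediction errors and another for negative ones. A unit that learns more from pleasant surprises settles on an optimistic estimate, and one that learns more from disappointments settles on a pessimistic one.

```python
import random


def asymmetric_value(rewards, alpha_pos, alpha_neg, v0=0.5):
    """Run one value predictor with separate rates for good and bad surprises."""
    v = v0
    for r in rewards:
        rpe = r - v                                   # reward prediction error
        rate = alpha_pos if rpe > 0 else alpha_neg    # asymmetric scaling of the error
        v += rate * rpe
    return v


# Hypothetical reward stream: reward of 0 or 1 with equal probability.
rng = random.Random(0)
rewards = [rng.choice([0.0, 1.0]) for _ in range(20000)]

pessimist = asymmetric_value(rewards, alpha_pos=0.002, alpha_neg=0.018)
neutral = asymmetric_value(rewards, alpha_pos=0.010, alpha_neg=0.010)
optimist = asymmetric_value(rewards, alpha_pos=0.018, alpha_neg=0.002)

print("pessimist:", round(pessimist, 2))
print("neutral:  ", round(neutral, 2))
print("optimist: ", round(optimist, 2))
```

All three predictors see exactly the same rewards, yet they disagree about what to expect. Taken together, a population of such units carries information about the spread of possible outcomes, not just their average, which is one plausible reading of why this diversity might speed learning.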

As they state on their blog, DeepMind’s work highlights the “uniquely powerful and fruitful relationship between AI and neuroscience in the 21st century”. But it also suggests how much more there is to learn. Dopamine is just one of over 50 neurotransmitters we use. It is a critical control system, but consider how many more we may need in healthy AI minds. Then there is the challenge of building analogs to human ethics, and immune systems, the subjects of our next two posts. Consider also the genetic and cellular systems that control the development and evolution of healthy brains. Will we need to understand a good share of those as well?

In our coming posts, we will argue that not only strong neuromimicry, but deep biomimicry, with analogs to genes, will likely be necessary to produce AIs with enough general intelligence, flexibility, and trustability to be deployed widely in human society.

What Remains to Be Built: The Stunning Complexity of the Brain

Consider the adaptive intelligence contained in biological minds. They have emerged via a deep selective history of experimental evolutionary change and predictable developmental cycling, in a process called autopoesis (self-reproduction, self-maintenance, and self-variation/creation), a process that seems critical to all adapted complexity. We will examine autopoesis in many complex systems in our universe later in the series. Animal minds have a vast arsenal of genetically-encoded instincts, heuristics guiding them in inference (their dominant thinking type, with deductive logic a weak secondary type), deep redundancy, extreme parallelism, multivalent memory encoding and suppression, massive modularity (at least 500 discrete modules in human brains), and complex process hierarchies.

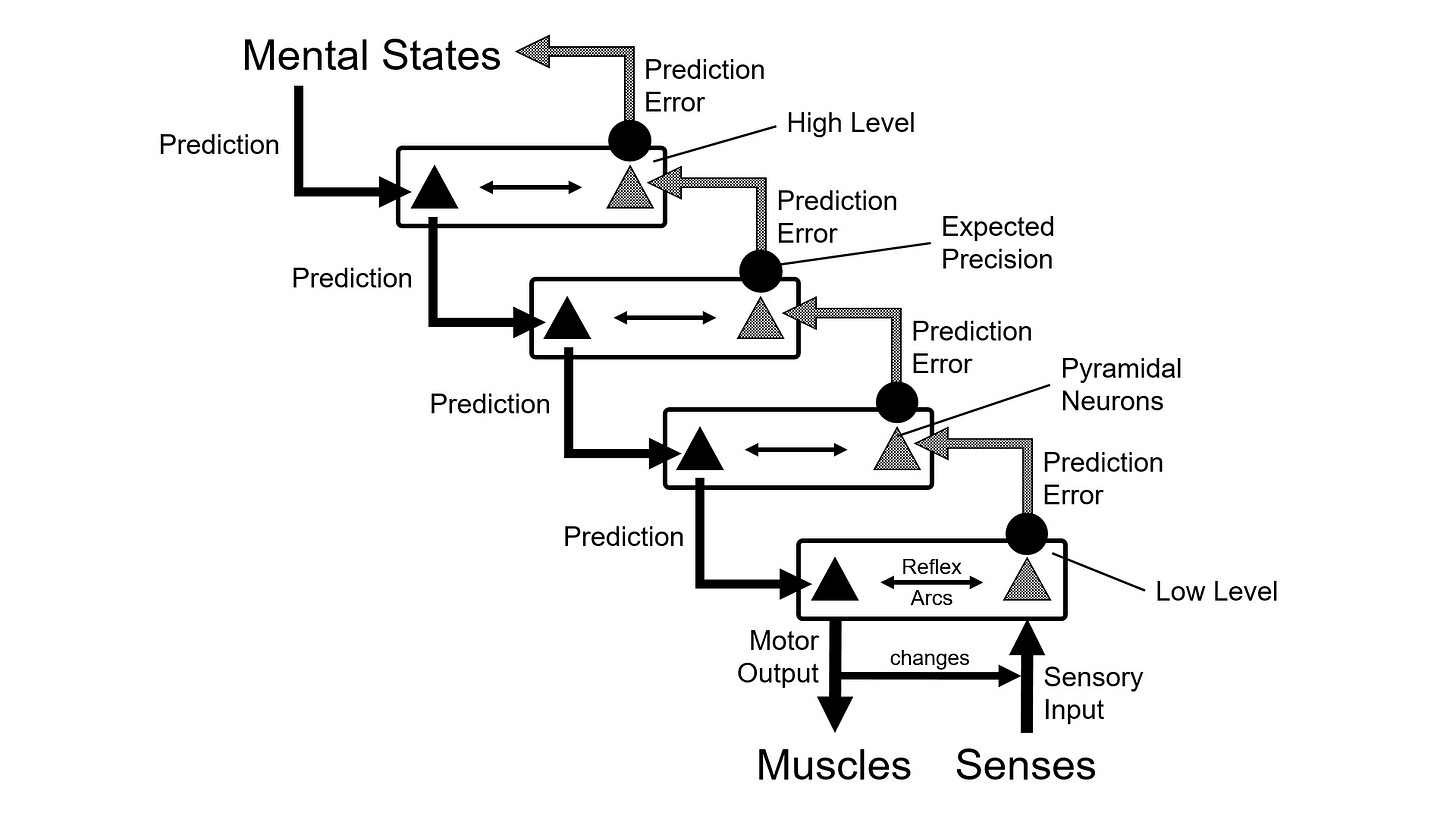

Today, all of our best models of how minds work are based on some variant of the predictive processing model of mind, a generalization of the 1970s Rescorla-Wagner model. We are working on many forms of more complex prediction today. Natural language deep learners using vector embeddings, like Google’s word2vec and OpenAI’s GPT language models, are early linguistic prediction processes. OpenAI’s DALL-E 2 is an early mimic of language-driven visual prediction (and an impressive tool for generating image mashups via language commands). These systems are deeply valuable to humanity, but we expect our AI systems will need many more levels of prediction, and new mechanisms of exploration and preference, under selection, to become generally intelligent. We are just getting started. Natural human intelligence makes predictions at multiple simultaneous levels, ranging from unconscious automatic intuitions to conscious feelings, deliberations, and linguistic and behavioral prediction processes. Much of this may be necessary in coming AIs.

As the neuroscientist Karl Friston proposes, all the predictive machinery in our brains may seek to minimize free energy, and use some variant of active inference, perhaps based, at least in their developmental aspects, on Bayesian algorithms. See data scientist Dominic Reichl’s post (figure below), The Bayesian Brain: An Introduction to Predictive Processing, 2018, for more on this fascinating yet still preliminary model. We will return to recent progress in the use of Bayesian algorithms in deep learning in Post 4, on Natural Security. We’ll also say more about evolution, development, and their implications for AI design in a future post.
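For readers who want a feel for what "Bayesian prediction" means at its simplest, here is a minimal Python sketch. It is a textbook one-dimensional Gaussian belief update, offered as an assumption-laden illustration only. It is not Friston's free energy formulation, and the function name `gaussian_update` and the example numbers are ours. The belief shifts toward each observation by an amount that depends on the prediction error and on how much the observation is trusted relative to the prior.

```python
def gaussian_update(prior_mean, prior_var, observation, obs_var):
    """Combine a Gaussian prior belief with one noisy Gaussian observation."""
    # Gain: how much to trust the observation relative to the prior (between 0 and 1).
    gain = prior_var / (prior_var + obs_var)
    prediction_error = observation - prior_mean
    post_mean = prior_mean + gain * prediction_error   # move toward the data
    post_var = (1.0 - gain) * prior_var                # uncertainty shrinks after each observation
    return post_mean, post_var


# Hypothetical example: a vague prior belief of 0, then three noisy readings near 2.
mean, var = 0.0, 1.0
for reading in [2.0, 1.8, 2.2]:
    mean, var = gaussian_update(mean, var, reading, obs_var=1.0)
    print(f"belief: mean={mean:.3f}, variance={var:.3f}")
```

The same pattern seen in the learning rules discussed earlier appears here: a prediction, an error, and a weighted correction. The difference is that the weight is no longer a fixed learning rate, but is set by the learner's own uncertainty.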

Finally, let’s discuss the most poorly understood aspect of human minds, consciousness. Animal minds have self-consciousness, and human minds add a strong capacity for compositional logic and modeling within our conscious working memory (“mental workspace”). As Brachman and Levesque argue in Machines Like Us: Toward AI with Common Sense (2022), generalized knowledge and common-sense reasoning may require the use of symbolic manipulation and logic in order to reason about open-ended situations that have never been encountered before. This uniquely human capability, at the seat of our unique self-consciousness, is critical to our adaptive, flexible reasoning.

Neurophysiologists like Danko Nikolic have speculated that this symbol-based self-consciousness is an emergent network layer that helps us to potentiate or suppress various competing feelings and thoughts, moment by moment. Consciousness, in other words, may function both to “experience beingness” and to help us continually decide which feelings, thoughts, memories, and circuits (subnetworks) we wish to strengthen, and which to weaken (activity-dependent plasticity, or more generally, activity theory), based on what happens in our symbolic, logical mental workspace. If this is a valid model, neuro-inspired AI may eventually require this emergent network layer as well. In other words, as we add network layers to our increasingly complex future AIs, beginning now with policies, rewards, and emotional analogs, it may be impossible for them not to become increasingly conscious. Consciousness is on a continuum in all living beings, and even in our own lives, moment to moment.

It may have to be so in naturally intelligent machines as well.

As we’ll describe in our next post, we are beginning to understand the critical roles of not only emotion and inference, but of emotional empathy, and of self- and other-models that are the rational basis for that empathy, as preconditions for the emergence of adaptive (prosocial, cooperative, fairly competitive) ethics, our next alignment challenge.

Over the last decade, it has become acceptable, even in traditionally conservative academia, to research AI safety and alignment. [Reasonable funding now exists for this worthy project, especially from non-academic, non-governmental funding sources, including philanthropists and AI leaders themselves. This is a very promising societal development].

In coming decades, as our natural AIs get increasingly capable, and exhibit many strange failure states (remember how evolutionary processes work, by necessary trial and error), we predict that validating that our AIs have good emotional-rational thinking processes, and adaptive empathy and ethics, will become major areas of academic research, corporate and open design, and regulation. If we can’t make sufficiently emotionally and socially intelligent AIs, we may have to pause our more complex AI deployments, until our science catches up to our technology. [2025 Note: We’ve already had several frontier models retired because they could not be fixed. We did not have the knowledge or skill to prevent their misbehavior.]

These are not trivial concerns. They are at the heart of the alignment challenge, as our AIs grow increasingly powerful and pervasive in coming years.

. . .

Now we’d like to ask a favor of you, our valued readers:

What have we missed, in this brief overview? What is unclear? Where do you disagree? Who else should we cite? What topics would you like to see discussed next? Who would you like to see as a guest poster or debater? Your feedback is greatly helpful to building our collective intelligence on this vital topic. Thanks for reading.

Natural Alignment - The Future of AI (A Limited Series of Posts)

Natural Intelligence: Growing Emotional-Rational Minds (this post)

Autopoesis: How Life and the Universe Manages Complexity (Coming 2025)

Evolution, Development, Mind and AI Design (Coming 2025)

Evo-Devo Values: How Biological Sentience Manages Complexity (Coming)

Stewarding Sentience: Personal, Group, and Network AI (Coming)

John Smart is a systems theorist, trained under living systems pioneer James Grier Miller at UCSD, and co-founder of the Evo-Devo Universe complex systems research community. Nakul Gupta has a BS in Physics from UCLA, and has recently received an MS in Computer Science from USC.